Associate Professor Lei Shao of the University of Michigan-Shanghai Jiao Tong University Joint Institute (UM-SJTU JI, JI hereafter) and his collaborators have published a research article titled “A wave-confining metasphere beamforming acoustic sensor for superior human-machine voice interaction” in Science Advances, a peer-reviewed multidisciplinary open-access journal published by the American Association for the Advancement of Science. The article proposes a new acoustic sensor for human-machine interaction (HMI) that can distinguish and track multiple sound sources against a noisy background while achieving an outstanding signal-to-noise ratio (SNR) and superior sensitivity at the same time.

Figure 1. Image of the proposed sensor with added visual effects

Lei Shao and Professor Wenming Zhang of the SJTU School of Mechanical Engineering are the corresponding authors of the paper. The first authors include doctoral student Kejing Ma of the School of Mechanical Engineering and JI doctoral student Huyue Chen. The research was funded by the National Natural Science Foundation of China and the Science and Technology Cooperation Project of Shanghai.

Conversation is the most common and effortless way for people to communicate. Similarly, voice-based HMI with robust sound sensing, speech recognition, and emotion perception is expected to become a straightforward way of interacting with machines. However, no existing auditory system, whether commercial or newly developed in a laboratory, can achieve both high SNR and high sensitivity across the daily phonetic frequencies while also amplifying, separating, and localizing multiple sound sources that occur simultaneously.

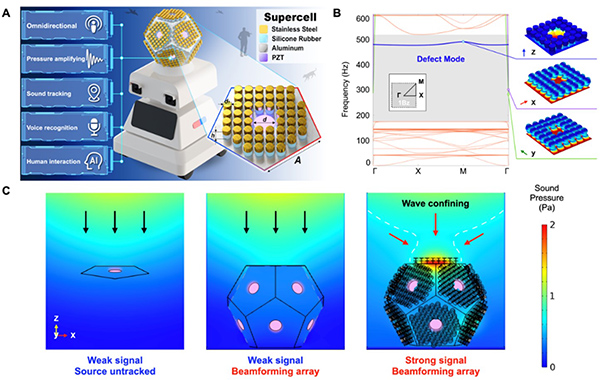

Figure 2. Design and principle of the proposed acoustic metasphere

This paper proposes a unibody acoustic metamaterial spherical shell with equidistant defected piezoelectric cavities, referred to as the metasphere beamforming acoustic sensor (MBAS). It demonstrates wave-confining capability and low self-noise, simultaneously achieving an outstanding intrinsic signal-to-noise ratio and ultrahigh sensitivity over a range spanning the daily phonetic frequencies, together with omnidirectional beamforming for localizing multiple sound sources. Moreover, the MBAS-based auditory system is shown to deliver high-performance audio cloning, source localization, and speech recognition in a noisy environment without any signal enhancement, which points to promising applications in various voice interaction systems.

Reference: https://www.science.org/doi/10.1126/sciadv.adc9230

Personal Profile

Lei Shao is currently an associate professor at the University of Michigan-Shanghai Jiao Tong University Joint Institute. He received his bachelor's degree from Shanghai Jiao Tong University in 2009 and his master's and doctoral degrees from the University of Michigan in 2011 and 2014, respectively. Prior to joining JI, he was a postdoctoral researcher at the US National Institute of Standards and Technology from 2014 to 2018. Lei Shao’s research focuses on microelectromechanical systems (MEMS), sensors, and actuators.
